What’s Actually on the New Google Cloud Professional Cloud Architect (PCA) Exam?

A lot of people have been wondering, and asking me, what's on the new Google Cloud Professional Cloud Architect exam. Google released it recently (October 30, 2025) to a lot of anticipation.

After all, Google ran a beta version of the new Professional Cloud Architect exam in late 2024, so we pretty much all knew that an update was coming. Usually, when Google runs a beta version of an exam, the real exam is released only a few months later. When that didn't happen by spring of 2025, I was a bit confused. But here we are. The new exam is here.

The Overlap

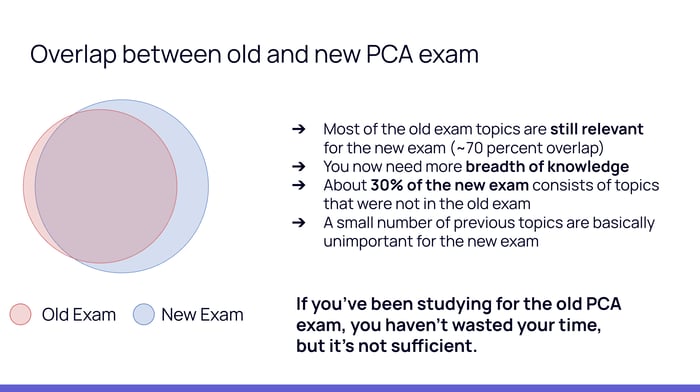

The good news is that most of the old exam topics are still relevant. I estimate there is a 70% overlap between the two versions. If you have been studying for the old version of the exam, and especially if you've been focusing on GCP bread-and-butter services or foundational concepts, you have not wasted your time.

However, you now need a greater breadth of knowledge. About 30% of the new exam consists of topics that were not in the old version at all. On the flip side, a small number of previous topics are now basically unimportant or have been deprioritized.

Tip #1: Study These Topics Less

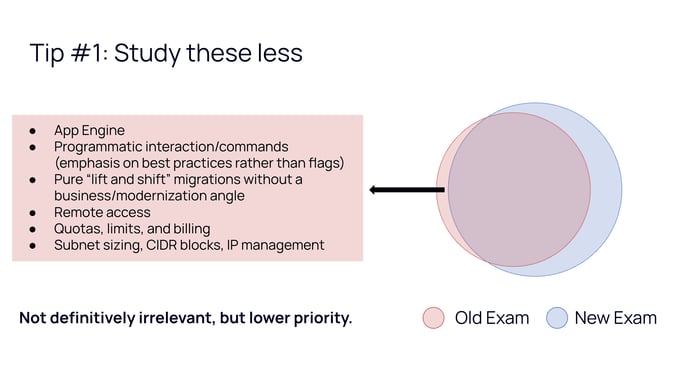

To study effectively, you need to know where to spend your time. These areas appeared regularly on the old exam but are much lower priority now:

App Engine: This used to show up frequently but is much less emphasized now. Google is encouraging people to use Cloud Run as the main serverless app deployment option.

Programmatic interaction: The old exam tested specific gcloud commands, flags, and syntax. The new exam focuses more on best practices and high-level decision-making.

Pure lift-n-shift: Migrating a VM to the cloud without a business or modernization angle is deprioritized. The new exam wants to see that you understand the business context and complicated modernization scenarios.

Remote access: Specific questions about remote access to VMs are much less of a focus now.

Quotas and billing: You do not need to know specific quotas, limits, and billing details nearly as much anymore.

Networking details: Subnet sizing, CIDR blocks, and IP management were more heavily tested on the old exam. The new exam emphasizes managed solutions and VPCs at a higher level of abstraction.

Tip #2: Study These Topics More

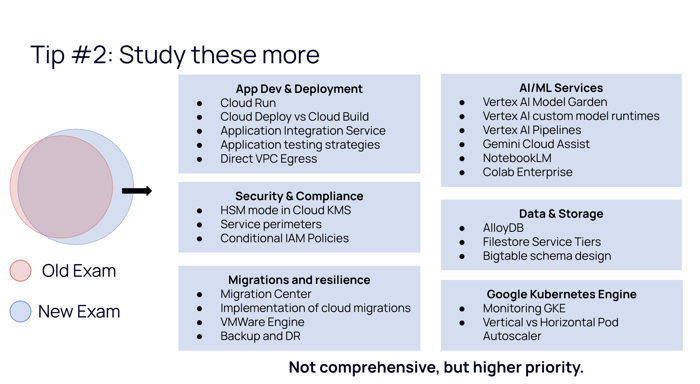

These are the areas that were either missing or not emphasized previously, but are now highly likely to show up:

App Dev and Deployment: Cloud Run is a major focus, along with Cloud Deploy for CD workflows. You should also know the Application Integration service for connecting applications and automating workflows. Understanding testing strategies, like unit, integration, load, and smoke tests, is now more important.

Networking: Direct VPC Egress is a newer feature that lets serverless services like Cloud Run connect directly to VPC resources.

Security and compliance: Be familiar with HSM mode in Cloud KMS and service perimeters via VPC Service Controls. Conditional IAM policies are also a newer addition to the exam.

AI and Machine Learning: This is a significant addition. You need a cursory understanding of Vertex AI Model Garden, custom model runtimes, and Vertex AI Pipelines for ML workflows. You should also know about Gemini Cloud Assist, NotebookLM, and Colab Enterprise.

Data and Storage: Focus on AlloyDB and the different service tiers for Filestore. Bigtable schema design is also important.

Migrations and Resilience: The Migration Center is a newer tool for assessing on-prem environments. You should also know about Google Cloud VMware Engine and the Backup and DR service.

Google Kubernetes Engine (GKE): Monitoring GKE health and performance is a bigger focus. You must also know when to use the Vertical Pod Autoscaler versus the Horizontal Pod Autoscaler.
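As a rough illustration of the difference: the Horizontal Pod Autoscaler changes the number of replicas, while the Vertical Pod Autoscaler adjusts the CPU and memory requests of each pod. The sketch below follows the replica calculation described in the Kubernetes HPA documentation (desired replicas is the current count scaled by the ratio of the observed metric to the target, rounded up, and skipped when the ratio is within a tolerance that defaults to 0.1). The function and parameter names are my own, chosen for illustration, and are not part of any API.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric, tolerance=0.1):
    """Approximate the HPA scaling rule for a single metric.

    current_metric and target_metric use the same unit, for example
    average CPU utilization in percent across the current pods.
    """
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid constant resizing.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(hpa_desired_replicas(4, 90, 60))   # 6
# 4 pods averaging 63% CPU against a 60% target: within tolerance, no change.
print(hpa_desired_replicas(4, 63, 60))   # 4
# 10 pods averaging 30% CPU against a 60% target: scale in.
print(hpa_desired_replicas(10, 30, 60))  # 5
```

If the load problem is that each pod is undersized rather than that there are too few pods, changing the replica count does not help, and that is the kind of situation where the Vertical Pod Autoscaler is the better fit.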

Tip #3: Do Not Spend A Lot of Time on the New Case Studies

The exam introduces some new case studies as well.

Altostrat Media - media company modernizing its GKE-based content platform with Gen AI for automated content analysis, personalized recommendations, and natural language interactions

Cymbal Retail - online retailer modernizing operations with Gen AI for automated catalog management, conversational commerce, and technical stack upgrades

EHR Healthcare - this is actually the same case study as was on the previous exam. Healthcare SaaS provider migrating from colocation to Google Cloud for scalable infrastructure, disaster recovery, and faster deployment of containerized EHR software.

KnightMotives Automotive (note: the link appears to be unavailable at the time of this writing)

These case studies represent realistic business scenarios where you'll need to apply architectural principles as if you were a consultant advising them as clients.

Google emphasizes these heavily, but I have a surprising tip: do not spend a lot of time on them.

That's because almost all case study questions can be answered without reading the case studies at all. They are testing whether you understand GCP services and architectural principles, not whether you have memorized a company's background.

Consider this question as an example.

You are part of the development team for EHR Healthcare's customer portal, which was recently migrated to Google Cloud. Since the migration, the application servers have been experiencing higher loads, leading to frequent timeout errors in the logs. The application uses Pub/Sub for messaging, and no errors have been logged for Pub/Sub publishing. To reduce the publishing latency, what should you do?

A) Increase the retry timeout settings for Pub/Sub

B) Switch from pull subscription to push model

C) Disable Pub/Sub message batching

D) Set up an additional Pub/Sub queue as backup

The correct answer is C. In this scenario, focusing on the specific business goals of EHR Healthcare is unnecessary because the technical challenge involves a fundamental architectural trade-off within Google Cloud Pub/Sub. The primary issue is the relationship between message batching and latency. Batching is a technique where multiple messages are grouped into a single publish request to improve efficiency and increase overall throughput.

While this strategy reduces request volume and maximizes the capacity to process high data volumes, it increases the latency of individual messages. This delay happens because the publisher client pauses to either reach a specific message count or wait for a set time window to close before sending the data. To specifically address a requirement for lower publishing latency, an architect must choose to disable or reduce these batching settings. This change ensures that messages are dispatched as quickly as possible without waiting for a batch to fill.
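To make the trade-off concrete, here is a small simulation of the batching behavior described above. It is a sketch in plain Python, not the Pub/Sub client library, and the function name and the arrival times are made up for illustration. In the real Python client, the equivalent knobs are the `max_messages`, `max_bytes`, and `max_latency` fields of `BatchSettings`; the simulation ignores the byte limit.

```python
def publish_delays_ms(arrivals_ms, max_messages, max_latency_ms):
    """Return how long each message waits in the publisher before its batch is sent.

    A batch is sent when it holds max_messages messages, or when max_latency_ms
    has passed since its first message arrived, whichever comes first.
    """
    delays = []
    batch = []  # arrival times of the messages waiting in the open batch

    def flush(sent_at):
        delays.extend(sent_at - arrived for arrived in batch)
        batch.clear()

    for now in arrivals_ms:
        # The time window of the open batch may have closed before this arrival.
        if batch and now >= batch[0] + max_latency_ms:
            flush(batch[0] + max_latency_ms)
        batch.append(now)
        if len(batch) >= max_messages:
            flush(now)

    if batch:  # the last partial batch goes out when its window closes
        flush(batch[0] + max_latency_ms)
    return delays


arrivals = [0, 100, 200, 300]  # hypothetical arrival times in milliseconds
print(publish_delays_ms(arrivals, max_messages=10, max_latency_ms=500))  # [500, 400, 300, 200]
print(publish_delays_ms(arrivals, max_messages=1, max_latency_ms=500))   # [0, 0, 0, 0]
```

With a batch size of 10, the batch never fills, so every message waits for the time window to close. With a batch size of 1, which is effectively batching turned off, each message is sent as soon as it arrives, at the cost of one publish request per message.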

Tip #4: Just because a question mentions AI, that does not mean it is testing AI

As many people have noted, AI is more heavily emphasized in this version of the exam. Naturally, that leads people to think that AI will more often be the answer whenever it is listed among the multiple choice options.

However, that's not necessarily the case. Google wants to see whether you understand how fundamental knowledge still applies to new technologies.

Here's another realistic example question to illustrate what I mean.

You are running a recommendation system on Google Cloud that uses Vertex AI Feature Store to serve real-time features to your application. Your team needs to monitor the system to understand how quickly the application is responding to user requests from end to end. Which metric should you primarily monitor?

A) Throughput

B) Feature freshness

C) Error rate

D) Latency

The correct answer is D, latency.

The question asks which metric you should monitor to see how quickly the application responds to user requests, which is literally the definition of latency. Even though the question uses "AI" terminology, the answer is simply latency. Latency is the measure of response time whether you are using a standard database or a cutting-edge AI feature store.
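For a sense of what monitoring latency looks like in practice, here is a minimal sketch that times a call from start to finish and summarizes a set of samples with nearest-rank percentiles. The helper names and the sample values are hypothetical, and a real system would normally read these numbers from its monitoring tooling rather than compute them by hand.

```python
import time


def timed_ms(func, *args):
    """Call func and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = func(*args)
    return result, (time.perf_counter() - start) * 1000


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample covering pct percent of the data."""
    ordered = sorted(samples)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceil(pct / 100 * n)
    return ordered[rank - 1]


# Hypothetical end-to-end response times for ten requests, in milliseconds.
samples_ms = [120, 80, 95, 110, 900, 100, 105, 90, 85, 115]

print(sum(samples_ms) / len(samples_ms))  # 180.0
print(percentile(samples_ms, 50))         # 100
print(percentile(samples_ms, 95))         # 900
```

The single slow request pulls the average well above what a typical user sees, which is why latency is usually tracked as percentiles rather than as a mean.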

Google wants you to understand how fundamental knowledge and principles still apply to new technologies.

Tip #5: Watch Out for Fake Practice Tests

Here's an example of a prompt that looks legitimate at first glance.

Your company is migrating to Google Cloud. Your tech lead decides to implement automated backups, disaster recovery procedures, and monitoring alerts for all critical systems. Which pillar of the Google Well-Architected Framework does this primarily represent?

This question has a business scenario. It mentions the Well-Architected Framework, which is in the new exam guide. So thousands of people will see this and think it's a realistic practice question.

In reality, though, someone probably just put the exam guide into ChatGPT and asked it to generate practice questions. Something being on the exam guide doesn't tell you how it's going to be tested. The real exam will usually test architectural decisions instead of definitions. There are many ways a concept can be tested. They're probably not going to ask you to identify which pillar of a framework something represents. They're going to give you a scenario and ask you what you should do, testing whether you can apply those principles without explicitly naming them.

3 More Realistic Practice Questions

To give you a sense of the actual exam, let's walk through three realistic scenarios:

Your company is developing a healthcare analytics platform that must meet strict data residency requirements. All patient data must be stored and accessed only within Canada, and no data may be transferred or read from any other region. What steps should you take to ensure that the application meets these requirements? Select all that apply.

A. Use IAM conditions to restrict access to users connecting from Canadian IP ranges

B. Configure VPC Service Controls to define a secure perimeter that prevents data exfiltration from approved Canadian projects

C. Set up firewall rules to block all incoming traffic from outside of Canada based on geographic IP filtering

D. Apply an Organization Policy with resource location constraints to restrict where resources and data can be created

E. Configure Cloud Load Balancing to route all client requests to a backend service residing within the required Canadian region.

Take a moment to think about which of these would actually enforce data residency.

The correct answers are B and D. VPC Service Controls creates a security perimeter around your Canadian projects that prevents data from leaving those boundaries. That's a hard technical control against data exfiltration. And Organization Policy with resource location constraints ensures that resources can only be created in approved regions, so you can't accidentally spin up a database in the wrong location. The other options either control where users connect from or where traffic is routed, but they don't actually enforce where data is stored and processed.
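The location restriction in option D is applied through the `constraints/gcp.resourceLocations` organization policy constraint. The sketch below is not that policy. It is a small, hypothetical audit helper that shows the kind of check the constraint enforces at creation time: every resource location must fall inside an approved set. The inventory, the function names, and the choice of allowed regions (the two Canadian regions, Montréal and Toronto) are assumptions for the example.

```python
# Assumed allowlist: the two Google Cloud regions located in Canada.
ALLOWED_REGIONS = {"northamerica-northeast1", "northamerica-northeast2"}


def region_of(location):
    """Map a zone such as 'northamerica-northeast1-a' to its region.

    Regions and multi-regions (for example 'US') are returned unchanged.
    """
    head, _, tail = location.rpartition("-")
    if len(tail) == 1 and tail.isalpha():
        return head
    return location


def residency_violations(resources):
    """Return the names of resources located outside the allowed regions."""
    return [r["name"] for r in resources if region_of(r["location"]) not in ALLOWED_REGIONS]


# Hypothetical inventory of resources and where they live.
inventory = [
    {"name": "patients-db", "location": "northamerica-northeast1-a"},
    {"name": "exports-bucket", "location": "US"},
    {"name": "reports-vm", "location": "us-central1-b"},
    {"name": "archive-bucket", "location": "northamerica-northeast2"},
]

print(residency_violations(inventory))  # ['exports-bucket', 'reports-vm']
```

Note that a check like this only covers where resources are created. Keeping data from being read or copied out of those projects is the job of the VPC Service Controls perimeter in option B.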

Here's the next question.

Your organization is planning to migrate on-premises virtual machines and an SAP instance to Google Cloud. You need to quickly estimate the costs of running these workloads in the cloud before proceeding with the migration. What should you use?

A. Use the Google Cloud Pricing Calculator to manually estimate costs

B. Use Cloud Billing reports to project costs based on similar workloads

C. Use Migration Center to assess your on-premises environment and estimate costs

D. Use Migrate to Virtual Machines to generate cost estimates during the migration process

Take a moment to think about which tool is designed for pre-migration cost assessment.

The correct answer is C. Migration Center is built specifically for this scenario. It assesses your on-premises environment, discovers your workloads, and provides cost estimates for running them in GCP. Migration Center is relatively new to GCP, and its ability to estimate costs has really only been available for the last couple of years. Previously, the answer to this question probably would have been the Pricing Calculator, and you'd have to manually input everything even for migrations. But now there's a purpose-built tool for this. The Pricing Calculator still works, but it's time-consuming and error-prone when you're dealing with a full migration. Cloud Billing reports only show costs for resources you're already running. And Migrate to Virtual Machines is a migration execution tool, not a cost assessment tool. You'd use it during the actual migration, not for initial cost planning.

Here's another one.

You need to deploy a machine learning model from Vertex AI Model Garden, but it requires a custom prediction routine with specialized preprocessing logic. What should you do?

For context, Model Garden is Vertex AI's collection of pre-trained models from Google and third-party providers that you can deploy without having to train your own models from scratch. So the question is how to deploy one of those models when it needs a custom prediction routine.

A. Deploy the model directly from Model Garden using the one-click deployment feature available in the interface

B. Download the model from Model Garden and deploy it on a Compute Engine instance with your custom prediction environment

C. Export the model artifacts to Cloud Storage and use Cloud Run to serve predictions with your custom prediction routine

D. Create a custom container with the model and prediction routine, push it to Artifact Registry, then deploy to a Vertex AI endpoint

Take a moment to think about how you'd handle custom preprocessing logic with a Model Garden model.

The correct answer is D. When you need custom prediction logic with a Model Garden model, you create a custom container that includes both the model and your preprocessing routine, push it to Artifact Registry, and deploy it to a Vertex AI endpoint. This keeps everything within the Vertex AI ecosystem and gives you full control over the prediction pipeline. The one-click deployment in option A only works for standard serving without custom logic. Deploying to Compute Engine works but means you're managing infrastructure yourself instead of using managed services. And while Cloud Run could technically serve predictions, Vertex AI endpoints are purpose-built for ML serving and give you better integration with the rest of the Vertex AI platform.
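To give a feel for what goes inside such a container, here is a minimal sketch of a prediction server using only the Python standard library. Vertex AI tells a custom container which port and routes to use through the `AIP_HTTP_PORT`, `AIP_HEALTH_ROUTE`, and `AIP_PREDICT_ROUTE` environment variables, and prediction requests carry an `instances` list while responses return a `predictions` list. Everything else here is a placeholder: the `preprocess` step, the fake "model" that counts tokens, and the fallback route names are assumptions for illustration, not the behavior of any Model Garden model.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer

# Set by Vertex AI inside the container; the fallbacks are for local runs only.
HEALTH_ROUTE = os.environ.get("AIP_HEALTH_ROUTE", "/health")
PREDICT_ROUTE = os.environ.get("AIP_PREDICT_ROUTE", "/predict")
PORT = int(os.environ.get("AIP_HTTP_PORT", "8080"))


def preprocess(instance):
    """Hypothetical preprocessing: trim and lowercase the input text."""
    return instance["text"].strip().lower()


def predict(instances):
    """Placeholder for the real model: returns a token count per instance."""
    return [{"tokens": len(preprocess(i).split())} for i in instances]


class Handler(BaseHTTPRequestHandler):
    def _send(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):
        # Health checks confirm the server is ready to receive traffic.
        if self.path == HEALTH_ROUTE:
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self):
        if self.path != PREDICT_ROUTE:
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length))
        self._send(200, {"predictions": predict(request["instances"])})


def serve():
    """Start the server; this would be the container's entrypoint."""
    HTTPServer(("0.0.0.0", PORT), Handler).serve_forever()


print(predict([{"text": "  Chest Pain, acute "}]))  # [{'tokens': 3}]
```

In a real deployment you would package this with the model weights in a container image, push the image to Artifact Registry, and reference it when uploading the model to Vertex AI.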

Preparation materials

I hope this was helpful! Good luck to you if you are preparing. If you want fresh materials to give yourself the best chance of passing, you can check out my Professional Cloud Architect course.